Privacy v. Utility: Ethics Lab team facilitates a data ethics workshop for experts at Harvard University

Update: Differential Privacy & the Census in the New York Times

Our team just got back from leading a workshop at Harvard University on data privacy. It was exciting to apply our tools and methods of working to a project that is so direly important in today’s world: how should we preserve privacy in the era of Big Data?

Even anonymization of data does not protect privacy, especially when very large datasets are aggregated and combined. The group of computer scientists we worked with developed formal tools that help ensure that no individuals can be personally identified from datasets, by introducing selected noise into the system. Of course, noise comes at a cost: it reduces the informational utility one can get out of the datasets.

We came in to guide conversations about how to make decisions on setting the privacy loss parameter—that is, how much privacy for individual data subjects should be traded for how much utility one can get out of a data set? The question emerges from a very technical process, but it also points to a set of ethical questions that might inspire innovative thinking.

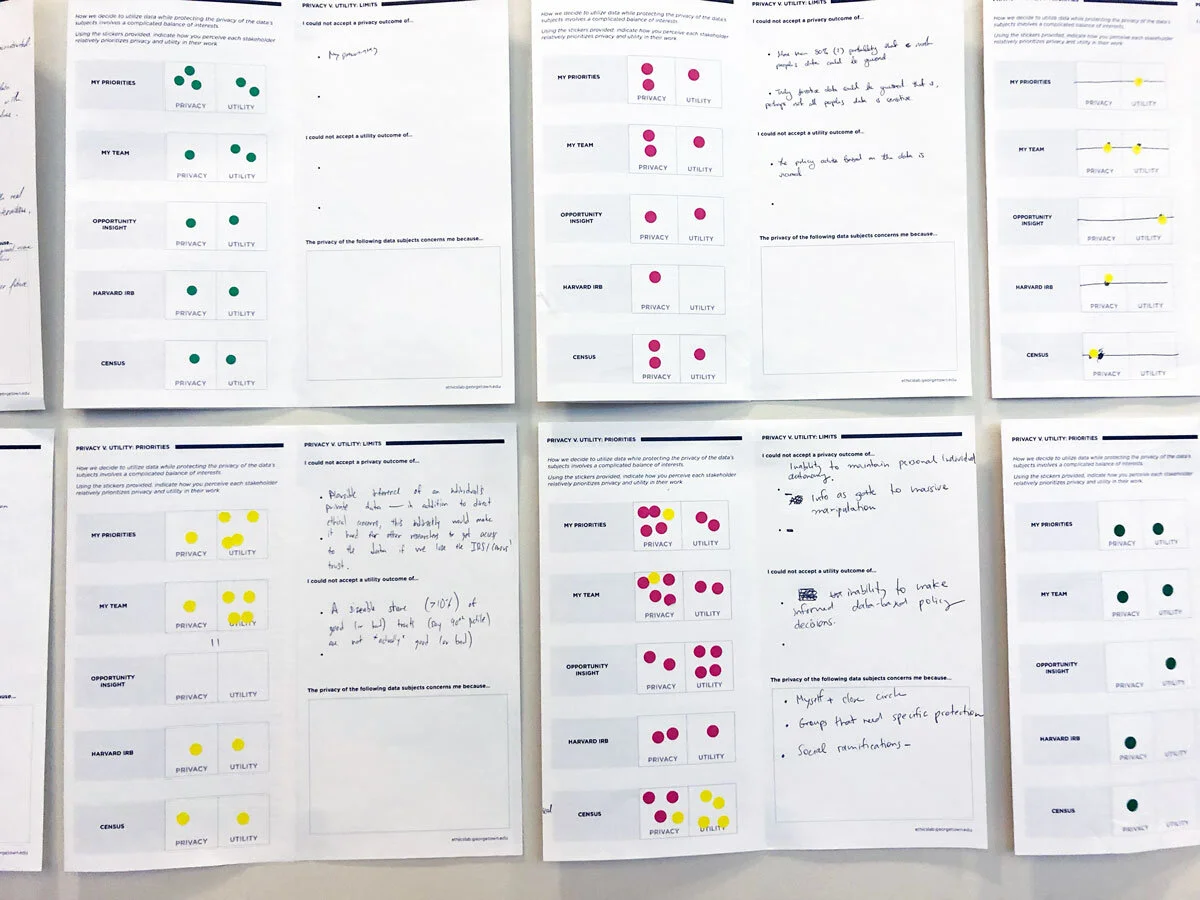

We led a series of exercises designed to elicit the researchers’ own tacit understanding of the value of privacy versus informational utility, the types of considerations that the algorithm can protect (or not), and what kinds of considerations different stakeholders throughout the problem landscape might value in relation to a data-research project that proposed to use this algorithmic solution.

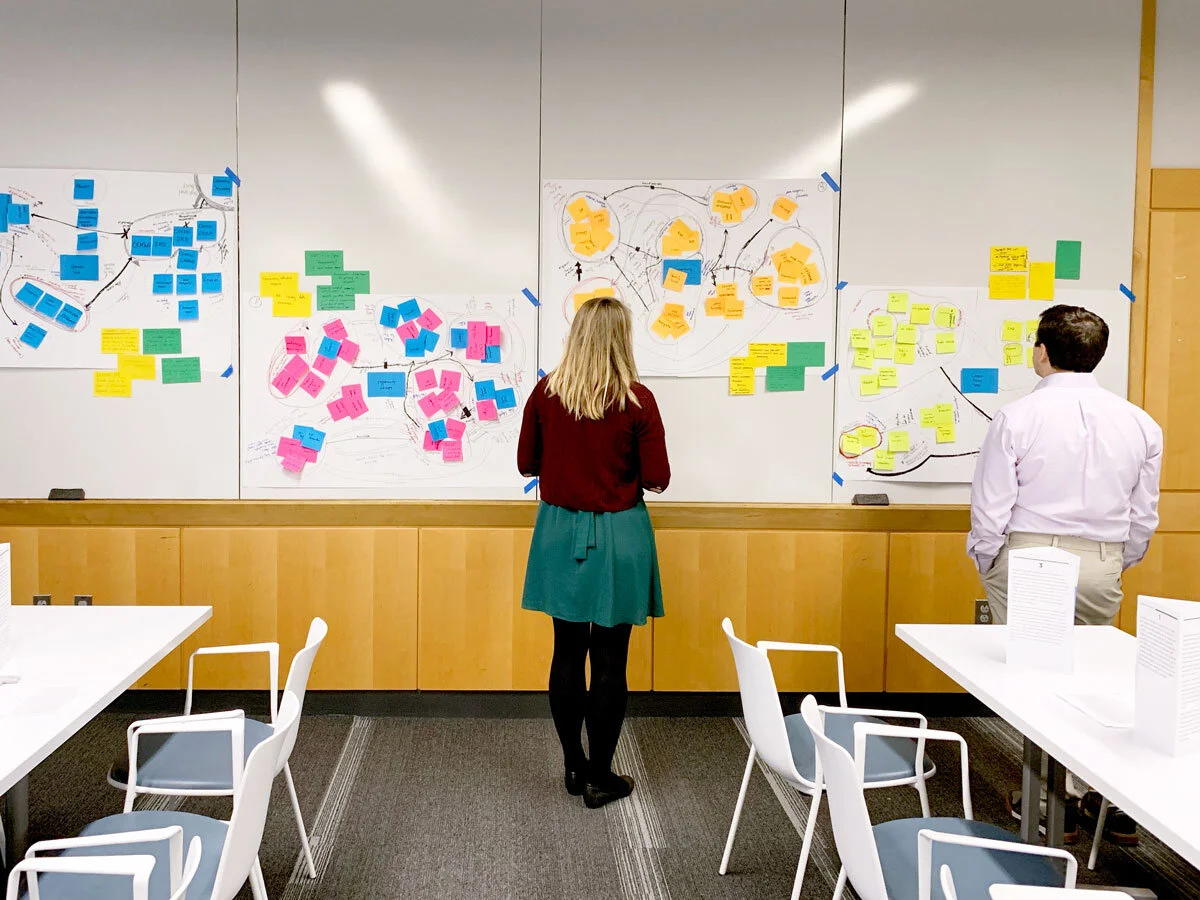

A series of fast-paced, collaborative exercises enabled the research team to recognize the variety of assumptions and perspectives among their own group. Participants were guided through a brief reflection on how the situation of their own work informed their assessment of privacy.

Through Ethics Lab’s “moral landscape” activity, mathematically-focused computer scientists, policy-minded social scientists, and legal scholars drew out competing notions of vulnerability and responsibility from different stakeholders’ perspectives. Through this visualization, the binary tradeoff between privacy and utility was better understood as a negotiation, and inspired ideas for communicating the tools’ effects more clearly to promote informed adoption.

A highlight for the Ethics Lab team was the “working dinner,” an informal evening surrounded by the work of the first day and organized by a challenge case. We looked at the story of “Jennifer,” who learns her personal information accumulated by an academic study might impact a bank’s determination of her mortgage interest rate in the future—what numeric privacy setting would Jennifer wish the researcher assign in the course of their study? What value would the researcher advocate for? The unexpectedly wide range of responses surprised many—from a single circle around a setting value (suggesting an alignment of values between subject and researcher) to a variety of zig-zagging shapes within the table itself (suggesting that some viewed privacy as a threshold notion that was contextually dependent, rather than a clear number that might be acceptable across situations). Using this case as a prompt, the diverse team of lawyers, computer scientists, social scientists, and philosophers debated why privacy is valuable and how to start to bridge technical and normative conceptions of privacy.