Learning About Average Case Complexity

On January 28, Professor Cal Newport's Introduction to Algorithms class learned about average case complexity and randomized analysis. One of the core examples involved an algorithm for aiding the hiring process that assumed each candidate was assigned a numerical score that indicated their value to the company. The Responsible CS Team developed an activity designed to highlight what may be lost when individuals are reduced to data points and to raise awareness about the choices and values at stake when designing decision support systems. The activity built from the hiring example used in class to teach the techniques, but added the human and social context behind the data points usually considered in building the algorithm. Professors Cal Newport and Elizabeth Edenberg co-led the activity and discussion.

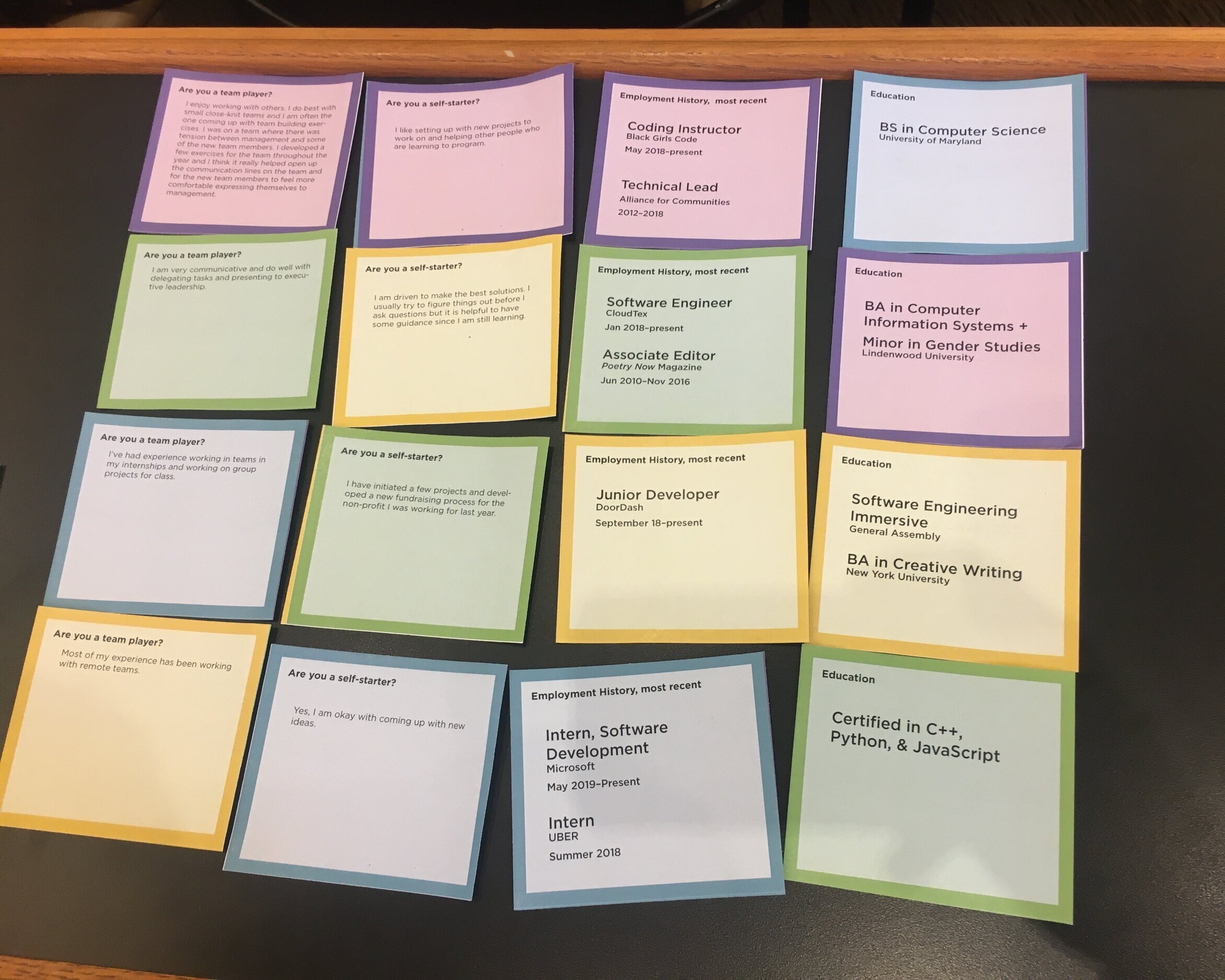

Working in groups of three, students were asked to imagine being members of a hiring committee at Google tasked with selecting a junior level software engineer. Groups were presented with information about four candidates across a range of attributes including education, employment history, leadership potential, collaborative ability, and learning style. Importantly, this information was presented in discrete segments corresponding to each attribute rather than in a cohesive format like a CV. Initially, this information was also anonymized so groups did not know which attributes a given candidate possessed. This partitioning of information allowed groups to systematically rank candidate information from best to worst across the distinct attributes much like an algorithm might. Groups then learned which set of information corresponded to each job candidate and were tasked with ranking the candidates themselves. After deciding upon candidate rankings, groups formed pairs to compare their choices and, more importantly, their rationale behind the ranking. This activity concluded with a class discussion about the importance of keeping the broader social context in view when determining which ranking systems to use for a given task. Because the Responsible CS designs its activities to compliment more traditional course content, this overtly normative discussion was helpfully informed by relevant technical results demonstrating that, even in simpler contexts, it is not always possible to reduce individuals to a totally ordered sequence that clearly indicates person A is “strictly better” than person “B” in the domain in question.

Determining how to reconcile an automated approach with more holistic evaluative concerns took center stage throughout the activity.

Notes from Dr. Newport’s Intro to Algorithms class.

When asked to explain how they determined their final candidate rankings, many groups reported proceeding systematically: assigning numerical scores for ranking first, second, third, or fourth for each of the job attribute categories, and and then aggregating these scores to determine the final rankings mathematically. Despite this mathematical approach, students were surprised to see that there was broad disagreement about which candidate was the best for the job. One group took a different approach. Rather than aggregating the scores in each category with everything weighted equally, they decided to “look at the bigger picture” and “assigned different weights to the categories.”

Seeking to reconcile with automaticity with holistic considerations required students to confront how context can and should inform their judgments about the data they have. When explaining their rankings, some groups appealed to factors such as the apparent honesty and confidence expressed by the candidate while others appealed to the level of detail and relevance of information provided in a candidate’s answers. Professor Edenberg highlighted that such rankings require rich contextual information and careful judgments that are not yet easily implemented by algorithms and that will still require responsible design choices by humans when they are. Building on this concern, Professor Newport urged students to consider how they would realistically implement automated decision support systems in real world contexts today. Many groups reported a preference for a multi-tiered approach, having automated decisions rule out candidates at early stages and leaving human judgment to handle final hiring decisions. Some groups noted a virtue of automation playing some role in the hiring processes is that it may facilitate avoiding certain kinds of bias. Another group observed that unintentional bias may also result from automation and that there was a need to continually evaluate an algorithm’s performance.

This session helped students confront the ways that normative judgments factor into the design and implementation of automated decision systems in ways that are not immediately apparent. It also highlighted the need for computer scientists to approach the design of such systems responsibly. Professor Newport closed the session with a call to action, observing that the challenge of responsibly implementing automated decision systems raises “huge and important questions in both real world and academic contexts” and that the territory is “ripe for new ideas.”